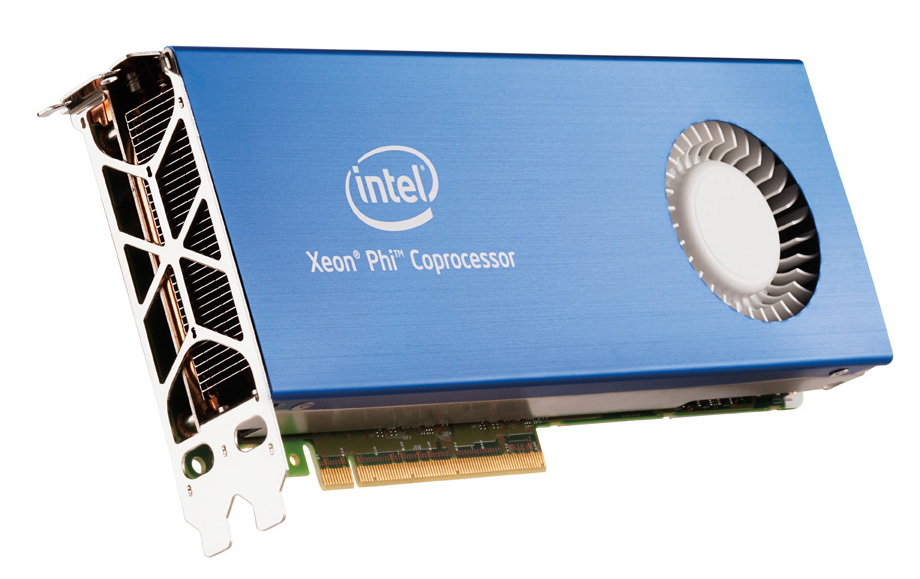

Intel is working hard to cast its new Xeon Phi compute accelerator cards in a good light, as most reports, including our own earlier coverage, show only modest achievements so far.

Intel’s Xeon Phi is the result of almost a decade of work by the company’s specialists and of huge investments in various projects that culminated in the current implementation.

The reality is that the company is still struggling to reach its goal of 1 TFLOPS of double-precision (FP64) performance. Although this value appeared in most of Intel’s previous presentations, it is still not an official specification.

Intel is now working with HP to build a new HPC system at the National Renewable Energy Laboratory (NREL), and the company claims that it will reach an impressive PUE rating of 1.06 once finished.

PUE is short for power usage effectiveness. A PUE rating of 2.0 describes a facility where, for every watt of power used by the IT equipment, an additional watt is spent as overhead by the cooling systems.
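As a minimal sketch of that definition, the ratio can be written as total facility power divided by IT power. The function names `pue` and `overhead_per_it_watt` are illustrative choices, not part of any official tool, and the sketch assumes all non-IT power is counted as overhead.

```python
def pue(it_power_w: float, overhead_power_w: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_power_w <= 0:
        raise ValueError("IT power must be positive")
    return (it_power_w + overhead_power_w) / it_power_w


def overhead_per_it_watt(pue_value: float) -> float:
    """Watts of overhead spent for every watt delivered to the IT equipment."""
    return pue_value - 1.0


# One watt of overhead for every IT watt gives the 2.0 rating described above.
print(pue(1.0, 1.0))

# A 1.06 rating leaves about 0.06 W of overhead per IT watt.
print(round(overhead_per_it_watt(1.06), 2))
```

Read this way, the claimed 1.06 rating would mean roughly 6 watts of overhead for every 100 watts of IT load.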

Editor’s Note:

I was involved in designing and building several HPC systems at our Nuclear Research Laboratory and the system power consumption / AC power consumption ratio was an important aspect of our project.

Basically, the situation is as follows: when a server consumes 1000 watts, there will be 1000 watts worth of heat dissipated inside the server room.

That’s quite a lot: ten racks with ten servers each will dissipate an amazing 100 kW worth of heat, the equivalent of 50 room heaters each running at 2 kW.
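The arithmetic behind that comparison can be checked with a few lines. This is only a sketch using the figures from the note (ten racks, ten servers per rack, 1000 W per server, 2 kW per heater); the constant names are made up for illustration.

```python
RACKS = 10                 # from the note
SERVERS_PER_RACK = 10      # from the note
SERVER_POWER_W = 1000      # from the note: heat dissipated per server
HEATER_POWER_W = 2000      # from the note: one 2 kW room heater

# All electrical power drawn by the servers ends up as heat in the room.
total_heat_w = RACKS * SERVERS_PER_RACK * SERVER_POWER_W
equivalent_heaters = total_heat_w / HEATER_POWER_W

print(f"{total_heat_w / 1000:.0f} kW of heat, equal to {equivalent_heaters:.0f} heaters")
```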

The owner of the server will pay for those 1000 watts consumed by the server, but he will also have to pay for those 1000 watts of heat to be removed from the server room.

A normal AC unit uses roughly 1200 watts of power to remove 1000 watts worth of heat from the room.

The end result is that the server owner gets 1000 watts worth of computing performance, but he has to pay for 2200 watts of energy and that’s a 120% overhead.
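The figures in this example can be worked through as a short calculation. The sketch below takes the 1000 W server and the 1200 W air-conditioning draw from the note as given and assumes cooling is the only overhead; the helper `cooling_overhead` is a hypothetical name used only here.

```python
SERVER_POWER_W = 1000   # from the note: power drawn by the server
AC_POWER_W = 1200       # from the note: AC power needed to remove that heat


def cooling_overhead(it_w: float, cooling_w: float):
    """Return total power, overhead as a percentage of IT power, and implied PUE."""
    total_w = it_w + cooling_w
    overhead_pct = 100.0 * cooling_w / it_w
    implied_pue = total_w / it_w
    return total_w, overhead_pct, implied_pue


total_w, overhead_pct, implied_pue = cooling_overhead(SERVER_POWER_W, AC_POWER_W)
print(f"total: {total_w} W, overhead: {overhead_pct:.0f}%, PUE: {implied_pue:.2f}")
```

Under these assumptions the server room in the example would have a PUE of about 2.2, which gives a sense of how far a 1.06 rating is from a conventional air-conditioned setup.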

Therefore, power consumption in HPC data centers is a very important issue.

Intel’s claim of a PUE rating of 1.06 can’t really be verified right now as the system is not finished, but it can be calculated using the official guidelines.

The main point is that such a high level of efficiency can only be achieved by improving the way the cooling system works. If the systems dissipate modest amounts of heat, the server room will heat up less and more slowly.

When a server room heats up more slowly and contains systems that don’t suddenly dissipate large amounts of heat, it can be cooled by other means instead of the usual AC units found in most modest implementations.

Such a high performance system with such a complex array of servers will most certainly use intelligent cooling solutions that will be able to keep the temperature in check with a modest overhead.

We doubt that the system will reach the touted 1.06 PUE value, but we wish Intel and HP good luck.

The conclusion is that the efficiency is not really coming from the Xeon Phi or the Sandy Bridge E processors, but from the way the cooling system is designed, especially when the PUE rating is the main criterion.

Via: Intel Xeon Phi to Power World's Most Power-Efficient HPC Data Center
